Coding is a language; it is a way to communicate with computers. Coding is one of the essential skills in the world today. It’s not just a tool for developers; it’s for people who want to make their lives easier and more efficient.

There are many different types of coding, and they can be used in almost any field. From software development to web design and product management, coding is integral to how modern businesses function.

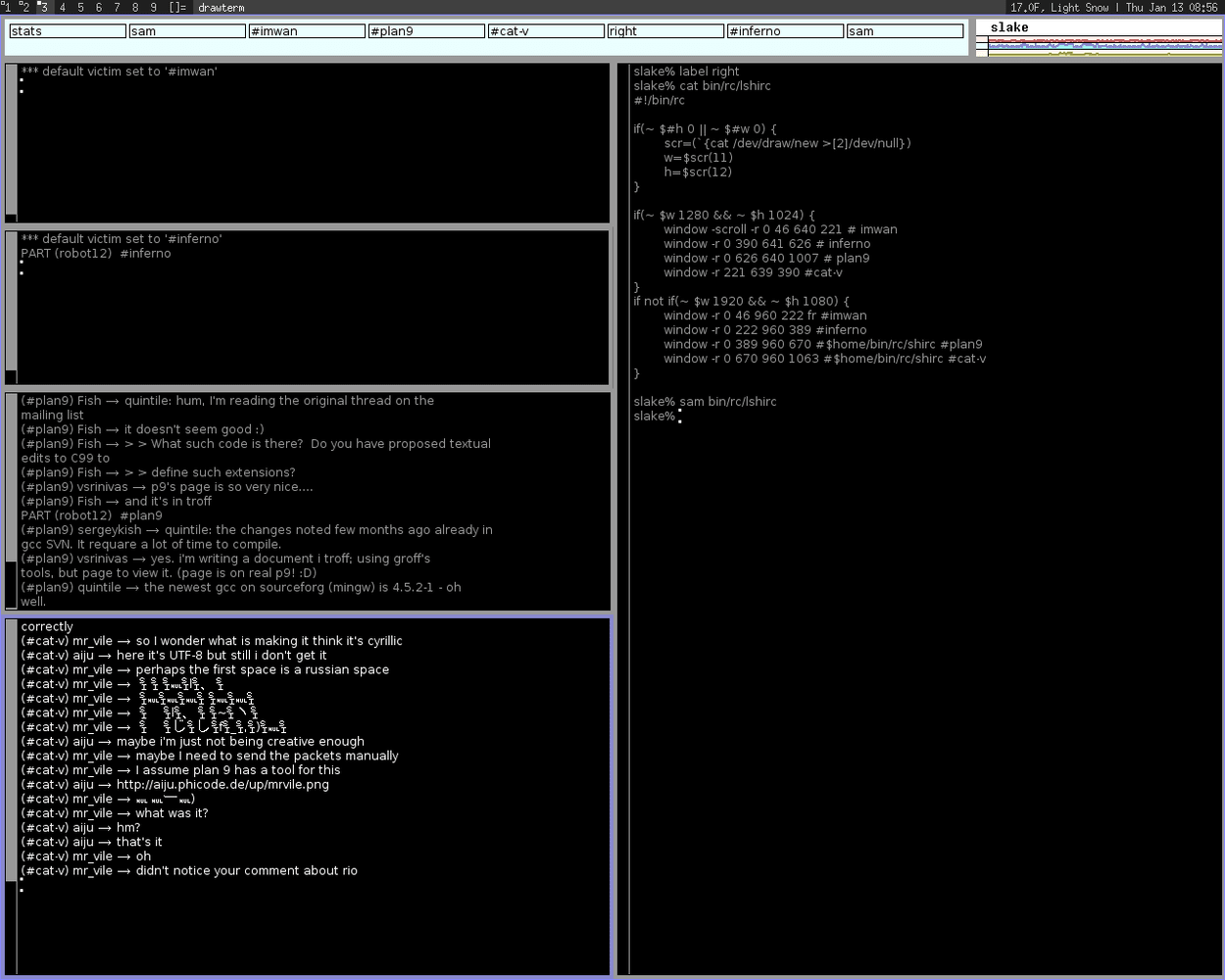

Unicode and UTF-8 are related, but they are not the same kind of thing. Unicode is a standard that defines a character set: it assigns a unique number, called a code point, to every character. UTF-8 is an encoding: a set of rules for storing those code points as bytes in files and network messages.

The main difference between Unicode and UTF-8 is that Unicode says which number stands for which character, while UTF-8 says how that number is written as bytes. UTF-8 is a variable-length encoding that uses one to four bytes per code point, so common ASCII characters take a single byte and other characters take more.

Let’s discuss these encodings in detail.

What Is Unicode?

Unicode is a universal character encoding standard. It’s a way of mapping characters to numbers, called code points, so that all computers interpret the same text the same way.

Each character has one code point regardless of the platform, program, or language it appears in. For example, the letter “A” is U+0041 and the euro sign “€” is U+20AC everywhere.
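As a small illustration of the character-to-number mapping, the Python sketch below looks up the code points of a few characters and converts them back. The sample characters and the `code_points` name are chosen only for this example.

```python
# Map a few characters to their Unicode code points and back again.
samples = ["A", "ж", "あ", "😀"]

# ord() returns the code point of a single character as an integer.
code_points = {ch: ord(ch) for ch in samples}

for ch, cp in code_points.items():
    # Code points are conventionally written as U+ followed by hex digits.
    print(f"{ch!r} -> U+{cp:04X}")
    # chr() is the inverse of ord(): integer code point -> character.
    assert chr(cp) == ch
```

Note that nothing here involves bytes yet. A code point is just a number; how it is stored is the job of an encoding such as UTF-8.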

It was created by the Unicode Consortium, a non-profit organization comprising many different companies, including Apple and Google. Most major software companies, including Microsoft and Apple, have adopted the Unicode standard.

What Is UTF-8?

UTF-8 is a standard for encoding Unicode characters. It’s a Unicode Transformation Format, which converts each code point into a sequence of one to four bytes and allows those bytes to be converted back into characters.

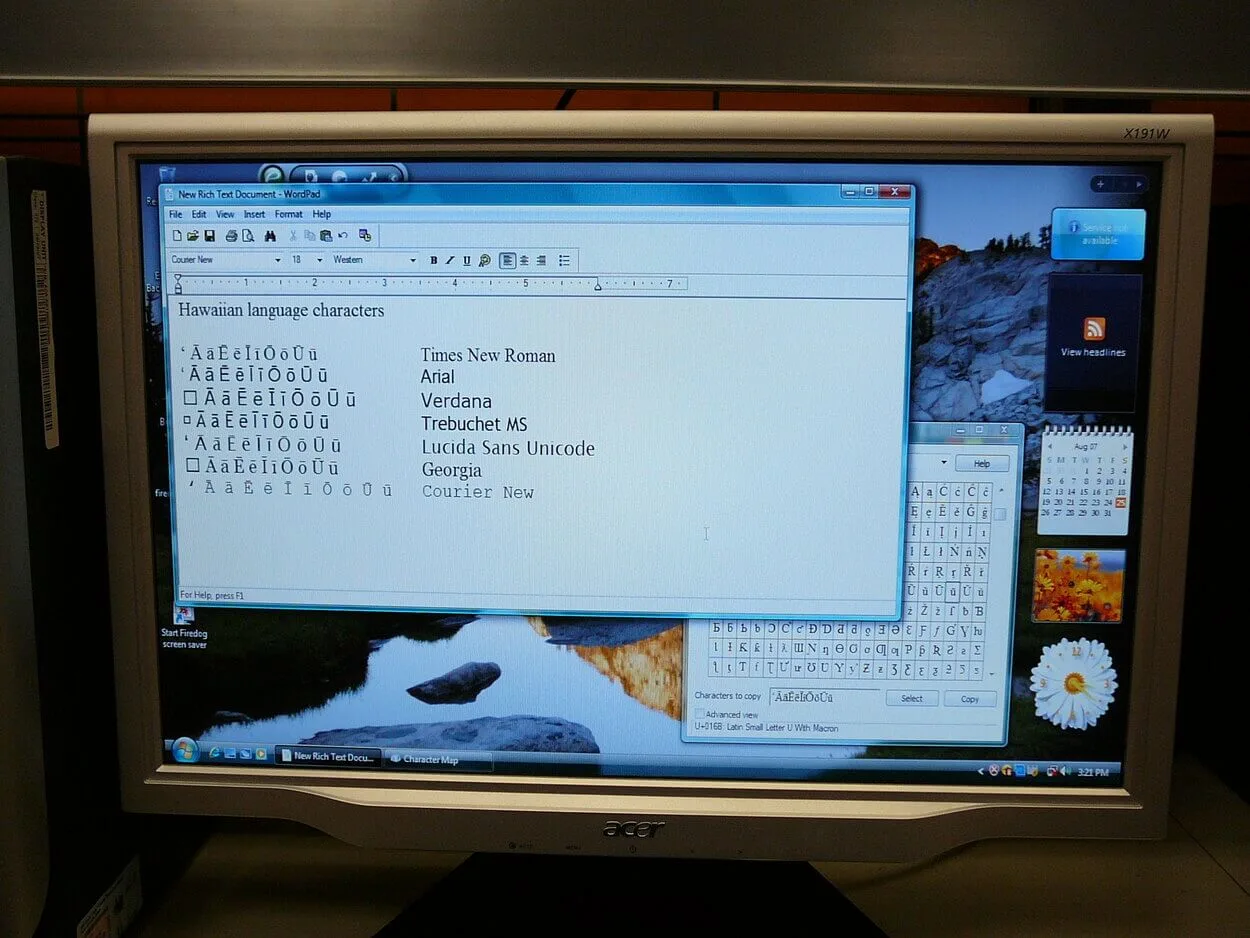

Unlike the earlier code page standards (such as CP437), each of which covered only a limited set of characters, UTF-8 can encode every Unicode character, which includes characters from almost all languages in use today. It is also backward compatible with ASCII.

This is useful because a character keeps the same code point whatever the language of the surrounding text, so you can use one encoding to store and display text in multiple languages in the same document.
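For a rough idea of what this looks like in practice, the following Python sketch encodes a single string containing Latin, Cyrillic, and Japanese text to UTF-8 bytes and decodes it again. The sample text and variable names are made up for this example.

```python
# One string, three scripts, one encoding.
text = "Hello, Привет, こんにちは"

encoded = text.encode("utf-8")      # str (code points) -> bytes
decoded = encoded.decode("utf-8")   # bytes -> str (code points)

print(len(text), "characters")      # 20 characters
print(len(encoded), "bytes")        # 36 bytes
print(decoded == text)              # True: the round trip is lossless
```

The byte count is larger than the character count because the Cyrillic letters take two bytes each and the Japanese characters take three bytes each, while the ASCII characters take one.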

Difference Between Unicode And UTF-8

Unicode and UTF-8 are both standards for working with text, but they operate at different levels. The main difference between Unicode and UTF-8 is that Unicode defines the full set of code points (for example, it includes characters from Japanese, Chinese, Cyrillic script, etc.), while UTF-8 defines how to store those code points as bytes. UTF-8 can encode every Unicode character, not just a subset such as ASCII.

- UTF-8 is a variable-width encoding, while Unicode is a character set and has no width of its own.

- UTF-8 is designed to be backward compatible with ASCII: the 128 ASCII characters have the same code points in Unicode and the same single-byte values in UTF-8.

- UTF-8 uses 1 byte for ASCII characters, 2 bytes for most other European and Middle Eastern letters, 3 bytes for most Chinese, Japanese, and Korean characters, and 4 bytes for characters such as emoji.

- Unicode defines more than 100,000 characters, and UTF-8 can encode all of them; it is not limited to 65,536.

- Neither Unicode nor UTF-8 is “case sensitive” or “case insensitive”. “A” (U+0041) and “a” (U+0061) are different code points with different bytes, and whether they compare as equal is decided by the software doing the comparison.

- UTF-8 is not a simpler alternative to Unicode. It is one of several encodings of Unicode, alongside UTF-16 and UTF-32.

- Both cover upper and lower case letters, because UTF-8 can represent anything that has a Unicode code point.
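To make the byte widths concrete, here is a minimal Python sketch that encodes one character from each width class and checks that ASCII text produces identical bytes under both encodings. The sample characters and the `widths` name are illustrative only.

```python
# One sample character for each UTF-8 width: 1, 2, 3, and 4 bytes.
samples = ["A", "ж", "€", "😀"]

widths = {}
for ch in samples:
    data = ch.encode("utf-8")
    widths[ch] = len(data)
    print(f"U+{ord(ch):04X} {ch!r}: {len(data)} byte(s) -> {data.hex(' ')}")

# ASCII compatibility: pure ASCII text is byte-for-byte the same in UTF-8.
ascii_text = "plain ASCII text"
print(ascii_text.encode("ascii") == ascii_text.encode("utf-8"))  # True

# Upper and lower case letters are simply different code points.
print(hex(ord("A")), hex(ord("a")))  # 0x41 0x61
```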

You can also understand these differences by looking at this table.

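| Unicode | UTF-8 |
| --- | --- |
| It is a character set: it maps characters to code points. | It is an encoding: it maps code points to bytes. |
| It has no byte width of its own. | It uses one to four bytes per character. |
| It treats uppercase and lowercase letters as separate characters. | It encodes uppercase and lowercase letters as different bytes. |
| Its first 128 code points match ASCII. | Its encoding of those 128 code points is byte-for-byte identical to ASCII. |
| It defines more than 100,000 characters. | It can encode every one of those characters. |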

Why Is It Called UTF-8?

UTF-8 is a character encoding that supports text in virtually every language, including Chinese, Japanese, and Korean. It’s called UTF-8 because it encodes text in 8-bit units (bytes). Each character takes between one and four of these units, not always exactly 8 bits.

“UTF” stands for Unicode Transformation Format. The Unicode Standard defines a family of these formats, including UTF-8, UTF-16, and UTF-32, to standardize how characters are stored in computer systems. UTF-8 is the member of that family explicitly designed to be compatible with ASCII text while still supporting all of the world’s major languages.

Why Do We Use UTF-8 Encoding?

We use UTF-8 because it allows us to support far more languages and characters than ASCII, which defines only 128 characters. This means that when our users send us data, their characters survive intact instead of being lost or replaced.
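As a quick sketch of the limitation, the Python snippet below tries to encode the same message with ASCII and with UTF-8. The message text and variable names are invented for the example.

```python
# A message containing characters outside the 128-character ASCII range.
message = "Crème brûlée costs 5 €"

try:
    message.encode("ascii")
    ascii_ok = True
except UnicodeEncodeError as err:
    # ASCII has no byte values for accented letters or the euro sign.
    ascii_ok = False
    print("ASCII failed:", err.reason)

# UTF-8 can encode the whole message and decode it back unchanged.
utf8_bytes = message.encode("utf-8")
print(utf8_bytes.decode("utf-8") == message)  # True
```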

What Are The Uses Of Unicode?

Unicode is a standard for the consistent encoding, representation, and handling of text. It provides a unique number for every character in every script it covers, allowing computers to handle text consistently and efficiently.

Some of the uses of Unicode include:

- Encoding text, including emojis

- Standardizing character names across different applications

- Giving fonts a shared set of code points to map their glyphs to

UTF-8 or Unicode: Which One is Better?

Neither is better, because Unicode and UTF-8 do different jobs and are used together.

Firstly, Unicode is the character set that aims to represent every character in every language or script used on Earth. This is important because it means you won’t need a separate character set for each language.

Additionally, UTF-8 can encode the full range of Unicode characters, so choosing UTF-8 does not limit which characters or symbols you can use. The practical choice is between encodings of Unicode, such as UTF-8, UTF-16, and UTF-32, and UTF-8 is the usual default for files and the web because it is compact for ASCII-heavy text and compatible with ASCII.

Lastly, each code point has exactly one valid UTF-8 byte sequence. For example, “ö” (U+00F6) is always encoded as the bytes 0xC3 0xB6. Any ambiguity sits at the Unicode level: “ö” can also be written as the letter “o” followed by a combining diaeresis (U+006F U+0308), and normalization is used to make the two forms compare equal.
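For reference, the Python sketch below shows how “ö” behaves in practice. It uses the standard `unicodedata` module; the variable names are chosen for this example. It compares the single-code-point form with the “o plus combining diaeresis” form, which look the same on screen but are different code point sequences and therefore different UTF-8 bytes.

```python
import unicodedata

precomposed = "\u00f6"    # ö as one code point, U+00F6
decomposed = "o\u0308"    # o (U+006F) followed by COMBINING DIAERESIS (U+0308)

# Each code point sequence has exactly one UTF-8 byte sequence.
print(precomposed.encode("utf-8"))   # b'\xc3\xb6'
print(decomposed.encode("utf-8"))    # b'o\xcc\x88'

# The two strings are not equal until they are normalized to the same form.
print(precomposed == decomposed)     # False
same_after_nfc = unicodedata.normalize("NFC", decomposed) == precomposed
print(same_after_nfc)                # True
```

If an application compares or searches user-supplied text, normalizing both sides to one form (NFC here) before comparing is a common way to avoid surprises.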

How Many Bits Is Unicode?

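Unicode itself is not a fixed number of bits. Code points range from U+0000 to U+10FFFF, so the largest one needs 21 bits. How many bits a character occupies in memory or on disk depends on the encoding form.

Unicode defines three encoding forms, named after the size of their code unit: UTF-8 (8-bit), UTF-16 (16-bit), and UTF-32 (32-bit). In UTF-8, a character takes one to four bytes; a single byte covers only the 128 ASCII characters.

In UTF-16, a character takes one or two 16-bit units. One unit (two bytes) covers the Basic Multilingual Plane (U+0000 to U+FFFF), and characters beyond it, such as most emoji, use a pair of units called a surrogate pair. In UTF-32, every character takes exactly four bytes.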
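A short Python sketch can show how the storage size of one character varies between the Unicode encoding forms UTF-8, UTF-16, and UTF-32. The sample characters and the `sizes` name are assumptions for the example, and the `-le` codec variants are used so that no byte order mark is added to the output.

```python
# Compare the storage size of the same characters in three encoding forms.
samples = ["A", "€", "😀"]

sizes = {}
for ch in samples:
    utf8 = ch.encode("utf-8")
    utf16 = ch.encode("utf-16-le")   # little-endian, no byte order mark
    utf32 = ch.encode("utf-32-le")   # little-endian, no byte order mark
    sizes[ch] = (len(utf8), len(utf16), len(utf32))
    print(
        f"U+{ord(ch):04X} needs {ord(ch).bit_length()} bits: "
        f"UTF-8 {len(utf8)} B, UTF-16 {len(utf16)} B, UTF-32 {len(utf32)} B"
    )

# The largest code point, U+10FFFF, fits in 21 bits.
max_bits = (0x10FFFF).bit_length()
print(max_bits)  # 21
```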

How To Convert From Unicode To UTF-8?

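The Unicode standard was developed to provide a unique number for every character in all languages, and it’s widely used as the standard for representing text. UTF-8 is one of the Unicode encodings, so “converting from Unicode to UTF-8” means turning a sequence of code points into bytes.

To convert, take each code point and check its range. Code points up to U+007F become a single byte with the same value. Code points up to U+07FF become two bytes, code points up to U+FFFF become three bytes, and the rest (up to U+10FFFF) become four bytes. In a multi-byte sequence, the first byte starts with as many 1 bits as there are bytes in the sequence, and each following byte starts with the bits 10; the remaining bits hold the code point. In practice, most programming languages provide a built-in function that does this for you.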
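As a rough illustration of how a code point becomes UTF-8 bytes, here is a hand-written Python sketch of the standard UTF-8 bit layout. The function names `encode_code_point` and `to_utf8` are hypothetical helpers written for this example; in real code you would normally just call the built-in `str.encode("utf-8")`, which the sketch uses as a cross-check.

```python
def encode_code_point(cp: int) -> bytes:
    """Encode one Unicode code point as UTF-8 (illustrative helper)."""
    if cp < 0 or cp > 0x10FFFF or 0xD800 <= cp <= 0xDFFF:
        # Surrogates and out-of-range values are not valid in UTF-8.
        raise ValueError(f"not an encodable code point: {cp:#x}")
    if cp < 0x80:
        # 1 byte: 0xxxxxxx
        return bytes([cp])
    if cp < 0x800:
        # 2 bytes: 110xxxxx 10xxxxxx
        return bytes([0xC0 | (cp >> 6), 0x80 | (cp & 0x3F)])
    if cp < 0x10000:
        # 3 bytes: 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([
            0xE0 | (cp >> 12),
            0x80 | ((cp >> 6) & 0x3F),
            0x80 | (cp & 0x3F),
        ])
    # 4 bytes: 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
    return bytes([
        0xF0 | (cp >> 18),
        0x80 | ((cp >> 12) & 0x3F),
        0x80 | ((cp >> 6) & 0x3F),
        0x80 | (cp & 0x3F),
    ])


def to_utf8(text: str) -> bytes:
    """Encode a whole string by encoding each code point in turn."""
    return b"".join(encode_code_point(ord(ch)) for ch in text)


# Cross-check the manual encoder against Python's built-in encoder.
for sample in ["A", "ж", "€", "😀", "Hello, Привет"]:
    assert to_utf8(sample) == sample.encode("utf-8")
    print(sample, "->", to_utf8(sample).hex(" "))
```

Going the other way, `bytes.decode("utf-8")` turns the bytes back into a string of code points.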

Bottom Line

- Unicode and UTF-8 are two different things: Unicode is a character set, and UTF-8 is an encoding of it.

- Unicode assigns a code point to each character, while UTF-8 stores each code point as one to four bytes.

- Unicode is a standard that aims to support all the characters used in any language on Earth.

- UTF-8 can encode every one of those characters, not only those used in Western languages; ASCII characters simply take the fewest bytes.

- Unicode makes international text possible, and UTF-8 is the most common way to store and share that text between systems.

- Unicode defines more than 100,000 characters, and UTF-8 supports all of them.